In Liverpool, England, at a February 2020 conference on the rather unglamorous topic of government purchasing, attendees circulated through exhibitor and vendor displays, lingering at some, bypassing others. They were being closely watched. Around the floor, 24 discreetly positioned cameras tracked each person’s movements and cataloged subtle contractions in individuals’ facial muscles at five to 10 frames per second as they reacted to different displays. The images were fed to a computer network, where artificial-intelligence algorithms assessed each person’s gender and age group and analyzed their expressions for signs of “happiness” and “engagement.”

About a year after the Liverpool event, Panos Moutafis, CEO of Austin, Tex.–based Zenus, the company behind the technology, was still excited about the results. “I haven’t seen lots of commercial systems getting this level of accuracy,” he said to me during a video call, showing me a photograph of the crowd, the faces outlined with boxes. Zenus engineers had trained the system to recognize emotions by having it examine a huge data set of facial expressions with labels describing relevant feelings. The company validated the program’s performance in various ways, including live tests when people reported how they felt when an image was taken. The system, Moutafis said, “works indoors, it works with masks, with no lighting, it works outdoors when people wear hats and sunglasses.”

The Zenus setup is one example of a new technology—called emotion AI or affective computing—that combines cameras and other devices with artificial-intelligence programs to capture facial expressions, body language, vocal intonation, and other cues. The goal is to go beyond facial recognition and identification to reveal something previously invisible to technology: the inner feelings, motivations and attitudes of the people in the images. “Cameras have been dumb,” says A.C.L.U. senior policy analyst Jay Stanley, author of the 2019 report The Dawn of Robot Surveillance. “Now they’re getting smart. They are waking up. They are gaining the ability not just to dumbly record what we do but to make judgments about it.”

Emotion AI has become a popular market research tool—at another trade show, Zenus told Hilton Hotels that a puppies-and-ice-cream event the company staged was more engaging than the event’s open bar—but its reach extends into areas where the stakes are much higher. Systems that read cues of feeling, character and intent are being used or tested to detect threats at border checkpoints, evaluate job candidates, monitor classrooms for boredom or disruption, and recognize signs of aggressive driving. Major automakers are putting the technology into coming generations of vehicles, and Amazon, Microsoft, Google and other tech companies offer cloud-based emotion-AI services, often bundled with facial recognition. Dozens of start-ups are rolling out applications to help companies make hiring decisions. The practice has become so common in South Korea, for instance, that job coaches often make their clients practice going through AI interviews.

AI systems use various kinds of data to generate insights into emotion and behavior. In addition to facial expressions, vocal intonation, body language and gait, they can analyze the content of spoken or written language for affect and attitude. Some applications use the data they collect to probe not for emotions but for related insights, such as what kind of personality a person has and whether he or she is paying attention or poses a potential threat.

But critics warn that emotion AI’s reach exceeds its grasp in potentially hazardous ways. AI algorithms can be trained on data sets with embedded racial, ethnic and gender biases, which in turn can prejudice their evaluations—against, for example, nonwhite job applicants. “There’s this idea that we can off-load some of our cognitive processes on these systems,” says Lauren Rhue, an information systems scientist at the University of Maryland, who has studied racial bias in emotion AI. “That we can say, ‘Oh, this person has a demeanor that’s threatening’ based on them. That’s where we’re getting into a dangerous area.”

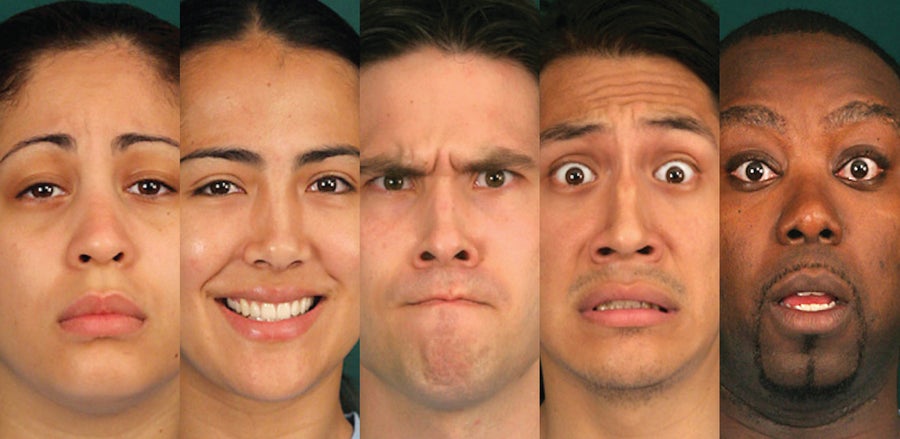

The underlying science is also in dispute. Many emotion-AI apps trace their origins to research conducted half a century ago by psychologists Paul Ekman and Wallace Friesen, who theorized that a handful of facial expressions correspond to basic emotions (anger, disgust, fear, happiness, sadness and surprise; Ekman later added contempt to the list) and that these expressions form a universally understood emotional language. But these ideas are now hotly debated. Scientists have found evidence of significant cultural and individual variations in facial expressions. Many researchers say algorithms cannot, at least not yet, consistently read the subtleties of expression across different individuals, whose faces may not match the stereotyped feelings those expressions supposedly signal. Ekman himself, who worked to develop early forms of emotion-recognition technology, now argues it poses a serious threat to privacy and should be heavily regulated.

Emotion AI is not intrinsically bad. If machines can be trained to reliably interpret emotions and behavior, the potential for robotics, health care, automobiles, and other fields is enormous, experts say. But right now the field is practically a free-for-all, and a largely unproven technology could become ubiquitous before societies have time to consider the potential costs.

In 2018 Mark Gray, then vice president for people and business operations at Airtame, which makes a device for screen-sharing presentations and displays, was looking for ways to improve the company’s hiring process. Efficiency was part of it. Airtame is small, with about 100 employees spread among offices in Copenhagen, New York City, Los Angeles and Budapest, but the company can receive hundreds of applications for its jobs in marketing or design. Another factor was the capricious nature of hiring decisions. “A lot of times I feel it’s coming from a fake voice in the back of someone’s head that ‘oh, I like this person personally,’ not ‘this person would be more competent,’” says Gray, who is now at Proper, a Danish property management tech company. “In the world of recruitment and HR, which is filled with the intangible, I kind of wanted to figure out how can I add a tangible aspect to hiring.”

Inside out: Some emotion-AI systems rely on work by psychologist Paul Ekman. He argues universal facial expressions reveal feelings that include (from left) sadness, happiness, anger, fear and surprise. Credit: Paul Ekman

Airtame contracted with Retorio, a Munich-based company that uses AI in video interviews. The process is quick: job candidates record 60-second answers to just two or three questions. An algorithm analyzes the interviewees' facial expressions and voices, along with the text of their responses, and generates a profile based on five basic personality traits, a common model in psychology shorthanded as OCEAN: openness to experience, conscientiousness, extraversion, agreeableness and neuroticism. Recruiters receive a ranked list of candidates based on how well each profile fits the job.
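How Retorio actually turns a profile into a ranking is proprietary and not described here. As a purely hypothetical sketch, one simple way to rank OCEAN profiles against a job is to score each candidate by how close their five trait scores sit to a target profile for the role. The trait values, the target and the distance-based `fit_score` are all invented for illustration.

```python
from math import dist

# The five OCEAN traits, in a fixed order so profiles can be compared as vectors.
TRAITS = ("openness", "conscientiousness", "extraversion", "agreeableness", "neuroticism")

def fit_score(profile, target):
    """Hypothetical fit measure: negative Euclidean distance to the job's target
    profile, so a closer match gets a higher score."""
    return -dist([profile[t] for t in TRAITS], [target[t] for t in TRAITS])

def rank_candidates(candidates, target):
    """Return candidate names ordered from best to worst fit."""
    return sorted(candidates, key=lambda name: fit_score(candidates[name], target), reverse=True)

# Hypothetical trait scores on a 0-1 scale.
target = {"openness": 0.7, "conscientiousness": 0.9, "extraversion": 0.6,
          "agreeableness": 0.8, "neuroticism": 0.2}
candidates = {
    "A": {"openness": 0.7, "conscientiousness": 0.85, "extraversion": 0.6,
          "agreeableness": 0.8, "neuroticism": 0.25},
    "B": {"openness": 0.3, "conscientiousness": 0.4, "extraversion": 0.9,
          "agreeableness": 0.3, "neuroticism": 0.7},
    "C": {"openness": 0.6, "conscientiousness": 0.7, "extraversion": 0.5,
          "agreeableness": 0.7, "neuroticism": 0.3},
}
print(rank_candidates(candidates, target))  # ['A', 'C', 'B']
```

Collapsing a profile into a single score makes the list easy to read, but it also hides which traits drove the ordering, and any bias baked into the trait estimates flows straight into who lands at the top.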

Such software is starting to change how business decisions are made and how organizations interact with people. It has reshaped the hiring process at Airtame, instantly elevating some candidates over others. Gray says that is because the profiling works. He shared a chart showing that the job performance of several recent hires in sales tracked their personality scores, with employees who had scored higher in conscientiousness, agreeableness and openness doing the best.

Machines that can understand emotions have long been the subject of science fiction. But in computer science and engineering, human affect remained an alien concept for a long time. As recently as the 1990s, “it was a taboo topic, something undesirable,” says Rosalind Picard of the Massachusetts Institute of Technology, who coined the term “affective computing” in a 1995 technical report. “People thought I was crazy, nuts, stupid, embarrassing. One respected signal- and speech-processing person came up to me, looked at my feet the whole time, and said, ‘You’re wasting your time—emotion is just noise.’”

Picard and other researchers began developing tools that could automatically read and respond to biometric information, from facial expressions to blood flow, that indicated emotional states. But the current proliferation of applications dates to the early 2010s, when deep learning, a powerful form of machine learning that employs neural networks roughly modeled on biological brains, came into wide use. Deep learning made AI algorithms powerful and accurate enough to automate a few tasks that previously only people could do reliably: driving, facial recognition, and analyzing certain medical scans.

Yet such systems are still far from perfect, and emotion AI tackles a particularly formidable task. Algorithms are supposed to reflect a “ground truth” about the world: they should identify an apple as an apple, not as a peach. The “learning” in machine learning consists of repeatedly checking the algorithm's verdicts on raw data (often images but also video, audio, and other sources) against training data labeled with the desired feature and adjusting the algorithm to reduce its errors. This is how the system learns to extract the underlying commonalities, such as the “appleness” from images of apples. Once the training is finished, the algorithm can identify apples in images it has never seen.
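The idea of learning from labeled examples can be sketched with a deliberately tiny, hypothetical classifier. It is a nearest-centroid model, far simpler than the deep neural networks described above, and its two features (redness and fuzziness) are invented stand-ins for what a real system would extract from pixels. Training averages the examples under each label; prediction picks the label whose average is closest.

```python
from math import dist

def train_centroids(examples):
    """Average the feature vectors for each label: the 'commonality' the model extracts."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))

# Hypothetical features: (redness, fuzziness), each scaled 0-1.
training = [
    ((0.9, 0.1), "apple"), ((0.8, 0.2), "apple"), ((0.85, 0.05), "apple"),
    ((0.6, 0.9), "peach"), ((0.7, 0.8), "peach"), ((0.65, 0.85), "peach"),
]
centroids = train_centroids(training)
print(predict(centroids, (0.88, 0.12)))  # apple
print(predict(centroids, (0.62, 0.88)))  # peach
```

The model knows nothing beyond its labels. Swap "apple" and "peach" for "happy" and "angry" as tagged by human labelers, and the same procedure learns to reproduce those labelers' judgments, not anyone's inner state.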

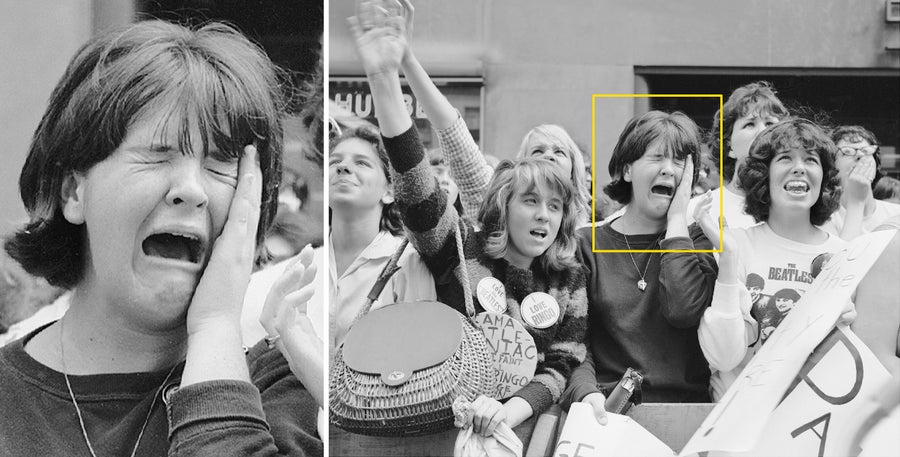

Context counts: A woman looks upset in a cropped photo from 1964 (left). But the complete image shows she is part of a joyous crowd (above). These are ecstatic Beatles fans outside the band's hotel in New York City. Credit: John Pedin NY Daily News Archive and Getty Images

But when the task is identifying hard-to-define qualities such as personality or emotion, ground truth becomes more elusive. What does “happiness” or “neuroticism” look like? Emotion-AI algorithms cannot directly intuit emotions, personality or intentions. Instead they are trained, through a kind of computational crowdsourcing, to mimic the judgments humans make about other humans. Critics say that process introduces too many subjective variables. “There is a profound slippage between what these things show us and what might be going on in somebody’s mind or emotional space,” says Kate Crawford of the University of Southern California Annenberg School for Communication and Journalism, who studies the social consequences of artificial intelligence. “That is the profound and dangerous leap that some of these technologies are making.”

The process that generates those judgments is complicated, and each stage has potential pitfalls. Deep learning, for example, is notoriously data-hungry. For emotion AI, it requires huge data sets that combine thousands or sometimes billions of human judgments—images of people labeled as “happy” or “smiling” by data workers, for instance. But algorithms can inadvertently “learn” the collective, systematic biases of the people who assembled the data. That bias may come from skewed demographics in training sets, unconscious attitudes of the labelers, or other sources.

Even identifying a smile is far from a straightforward task. A 2020 study by Carsten Schwemmer of the GESIS–Leibniz Institute for the Social Sciences in Cologne, Germany, and his colleagues ran pictures of members of Congress through cloud-based emotion-recognition apps by Amazon, Microsoft and Google. The scientists’ own review found 86 percent of men and 91 percent of women were smiling—but the apps were much more likely to find women smiling. Google Cloud Vision, for instance, applied the “smile” label to more than 90 percent of the women but to less than 25 percent of the men. The authors suggested gender bias might be present in the training data. They also wrote that in their own review of the images, ambiguity—ignored by the machines—was common: “Many facial expressions seemed borderline. Was that really a smile? Do smirks count? What if teeth are showing, but they do not seem happy?”

Facial-recognition systems, most also based on deep learning, have been widely criticized for bias. Researchers at the M.I.T. Media Lab, for instance, found these systems were less accurate when matching the identities of nonwhite, nonmale faces. Typically these errors arise from using training data sets that skew white and male. Identifying emotional expressions adds further layers of complexity: these expressions are dynamic, and faces in posed photos can have subtle differences from those in spontaneous snapshots.

Rhue, the University of Maryland researcher, used a public data set of pictures of professional basketball players to test two emotion-recognition services, one from Microsoft and one from Face++, a facial-recognition company based in China. Both consistently ascribed more negative emotions to Black players than to white players, although each did it differently: Face++ saw Black players as angry twice as often as white players; Microsoft viewed Black players as showing contempt three times as often as white players when the expression was ambiguous. The problem can likely be traced back to bias in the labeled images in training data sets, she says. Microsoft and Face++ did not respond to requests for comment.

Many companies now emphasize that they are aware of and addressing such issues. Retorio’s algorithm was trained on a data set of short interview videos labeled with personality traits, compiled over a period of years using paid volunteers, co-founder Christoph Hohenberger says. The company has taken steps to filter out various demographic and cultural biases that would tend to favor one group over another in the personality assessments, he says. But because there is currently no regulation or oversight of the industry, in most cases we have to take a company’s word for it: the robustness and equity of proprietary data sets are hard to verify. HireVue, a company that does video interviews with algorithmic analysis of the text and vocal tone, brought on an outside auditor to check for bias, but that is rare.

“This idea that there exists one standard for humans to be and that everyone can meet it equally” is fundamentally flawed, says Ifeoma Ajunwa, an associate professor at the University of North Carolina School of Law, who studies AI decision-making. The assumption, she says, means that “everyone who doesn’t meet that standard is disadvantaged.”

In addition to concerns about bias, the idea that outside appearances match a decipherable inner emotion for everyone has also started to generate strong scientific opposition. That is a change from when the concept got its start more than 50 years ago. At that time Ekman and Friesen were conducting fieldwork with the Fore, an Indigenous group in the highlands of southeast Papua New Guinea, to see if they recognized and understood facial expressions the same way as people from radically different backgrounds did—a stevedore from Brooklyn, say, or a nurse in Senegal. Volunteers were shown sets of photos of people making expressions for what the scientists called the six basic emotions. To provide context, a translator provided brief descriptors (“He/she is looking at something which smells bad” for disgust, for instance). The Fore responses were virtually identical to those of people surveyed in countries such as Japan or Brazil or the U.S., so the researchers contended that facial expressions are a universally intelligible emotional language.

The notion of a shared group of expressions that represented basic emotional states quickly became popular in psychology and other fields. Ekman and Friesen developed an atlas of thousands of facial movements to interpret these expressions, called the Facial Action Coding System (FACS). Both the atlas and the theory became cornerstones of emotion AI. The work has been incorporated into many AI applications, such as those developed by the company Affectiva, which include in-car systems and market research.

But scientists have argued that there are holes in Ekman’s theories. A 2012 study published in the Proceedings of the National Academy of Sciences USA, for instance, presented data showing that facial expressions varied considerably by culture. And in 2019 Lisa Feldman Barrett, a psychologist at Northeastern University, along with several colleagues, published a study that examined more than 1,000 scientific papers on facial expressions. The notion that faces revealed outward signs of common emotions had spread to fields ranging from technology to law, they found—but there was little hard evidence that it was true.

The basic emotions are broad stereotypical categories, Barrett says. Moment to moment, facial expressions reflect complicated internal states—a smile might cover up pain, or it might convey sympathy. And today, she contends, it is almost impossible for an AI system to consistently, reliably categorize those internal states if it has been trained on data sets that are essentially collections of labeled stereotypes. “It’s measuring something and then inferring what it means psychologically,” Barrett says. “But those are two separate things. I can’t say this about every company obviously, because I don’t know everything that everybody is doing. But the emotion-recognition technology that’s been advertised is routinely confounding these two things.”

Gender bias: In a study using politicians' faces, researchers found that an emotion-AI program determined that only a few men were smiling. The scientists' own review, however, indicated the vast majority of men had a smile. In contrast to men, the program, Google Cloud Vision, applied the “smile” label to many women. Percentages on labels of attributes in two images (below) indicate the confidence the AI had in the label accuracy. The woman got a smile label at 64 percent confidence—along with labels focused on her hair—whereas the man did not get that label at all. Credit: “Diagnosing Gender Bias in Image Recognition Systems,” by Carsten Schwemmer et al., in Socius: Sociological Research for a Dynamic World, Vol. 6. Published online November 11, 2020 https://doi.org/10.1177/2378023120967171 (headshots with labels); Wikipedia (headshots)

One reason for this problem, Crawford says, is that the world of tech start-ups is not aware of scientific debates in other fields, and those start-ups are attracted to the elegant simplicity of systems such as FACS. “Why has the machine-learning field been drawn to Ekman?” Crawford asks. “It fits nicely with a machine-learning capacity. If you say there is a limited set of expressions and strictly limited numbers of potential emotions, then people will adopt that view primarily because the theory fits what the tools can do.” In addition to Ekman’s work and the personality-trait model of OCEAN, emotion-AI companies have adopted other systems. One is a “wheel of emotions” devised by the late psychologist Robert Plutchik, which is used by Adoreboard, a U.K.-based company that analyzes emotion in text. All these approaches offer to translate the complexity of human affect into straightforward formulas. They may suffer from similar flaws, too. One study found that OCEAN produces inconsistent results across different cultures.

Nevertheless, researchers say emotion apps can work—if their limitations are understood. Roboticist Ayanna Howard, dean of the College of Engineering at the Ohio State University, uses a modified version of Microsoft’s facial-expression-recognition software in robots to teach social behavior to children with autism. If a robot detects an “angry” expression from its interlocutor, for example, its movements will adapt in ways that calm the situation. The stereotypical facial expressions may not always mean exactly the same thing, Howard says, but they are useful. “Yeah, we’re unique—but we’re not that different from the person next door,” she says. “And so when you’re talking about emotion in general, you can get it right maybe not all the time but more than random right.”

In general, algorithms that scan and aggregate the reactions of many people—such as those Zenus uses to read crowds—will be more accurate, Barrett says, because “better than random” becomes statistically meaningful with a large group. But assessing individuals is more treacherous because anything short of 100 percent accuracy ends up discriminating against certain people.
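Barrett's point about crowds versus individuals can be illustrated with a toy model. It assumes, hypothetically, a detector that labels each person "engaged" or not and is right 60 percent of the time for everyone, with errors that are independent and symmetric. Under those assumptions the crowd-level rate is distorted in a predictable way that can be undone, even though every single label is still wrong 40 percent of the time. Real systems' error rates differ across groups, as the bias studies described earlier show, which would break this neat correction.

```python
import random

def observed_rate(true_rate, accuracy):
    """Expected fraction labeled 'engaged' by a detector that is right with
    probability `accuracy` for every person (symmetric errors assumed)."""
    return accuracy * true_rate + (1 - accuracy) * (1 - true_rate)

def corrected_rate(observed, accuracy):
    """Invert the distortion above; only meaningful when accuracy > 0.5."""
    return (observed - (1 - accuracy)) / (2 * accuracy - 1)

def simulate_crowd(n, true_rate, accuracy, rng):
    """Label n people independently and return the observed 'engaged' fraction."""
    hits = 0
    for _ in range(n):
        engaged = rng.random() < true_rate   # the person's actual (hidden) state
        correct = rng.random() < accuracy    # does the detector get this person right?
        hits += engaged if correct else (not engaged)
    return hits / n

rng = random.Random(0)
observed = simulate_crowd(100_000, true_rate=0.7, accuracy=0.6, rng=rng)
print(f"observed {observed:.3f}, corrected {corrected_rate(observed, 0.6):.3f}")
```

With these made-up numbers the observed rate should land near 0.54 and the corrected estimate near the true 0.70, because random errors average out over a large crowd. No amount of averaging, however, tells you whether any one person's label is right.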

Many computer vision specialists are now embracing a more agnostic view of facial expressions. (And more companies have begun stating they do not directly map emotions or internal states.) “As the field has developed, there’s increasing understanding that many expressions have nothing to do with emotion,” says Jonathan Gratch, a computer science professor at the University of Southern California, who specializes in affective computing. “They’re kind of tools we use to influence each other, or they’re almost like words in a conversation, and so there’s meaning in those words. But it is not direct access to what I’m feeling in the moment.”

Yet as attempts to map and monetize emotional expressions, personality traits and behaviors grow, they are expanding the parts of our lives that can fall under surveillance. After 20 years of tech companies mining personal data from online behavior, a new, more intimate domain—faces and bodies and the signals they send—is poised for similar treatment. “If you’re a Coca-Cola, and you’re driving a campaign, and your principal methodology for messaging is the Internet, you know everything about what audience you reached,” says Jay Hutton, CEO of Vancouver-based company VSBLTY, which markets smart cameras and software that scan crowds, analyzing demographics and reactions to products for retailers. “But what if we could take computer vision and turn bricks and mortar into that same level of analytics?”

In December 2020 VSBLTY announced a partnership with Mexican brewer Grupo Modelo to create in-store networks of cameras to capture data in the beverage company’s 50,000 Modelorama convenience stores and neighborhood bodegas in Mexico and other Latin American countries by 2027. Demand will exist wherever there are screens and advertising, Hutton says. The technology “will be used in transit hubs, or in an airport, or a stadium,” he says. “Advertisers are paying millions of dollars to be a sponsor, and their ads appear on screens throughout the stadium, [and] they are looking for validation of that spin.”

This trend raises a basic legal and social question: Do the data from your face and body belong to you? In most places around the world, the answer is no—as long as your personal identity is kept separate from that data. “If you would like to know, and somebody’s in public, there seems to be no limit in scanning them for their emotions,” says Jennifer Bard, a professor at the University of Cincinnati College of Law, who has studied the issue.

Most emotion-AI companies that capture data in public say the information is anonymized, and thus its collection should not provoke concern. VSBLTY does not store facial images or other data that can be linked to identities, Hutton says. Zenus’s Moutafis notes that his company’s app does not upload the actual facial images that its cameras capture—only the relevant metadata on mood and position—and that it puts up signs and notices on meeting screens that the monitoring is occurring. “Explicit consent is not needed,” he says. “We always tell people deploying it that is a very good practice; when you have a surveillance sensitivity, you have to put up a sign that these areas are being monitored.” Typically, Moutafis says, people do not mind and forget about the cameras. But the diversity of applications means there are no common standards. It is also far from clear whether people and politicians will embrace such routine surveillance once it becomes a political and policy issue.

Ekman, who earlier worked with the company Emotient and with Apple on emotion AI, now warns it poses a threat to privacy and says companies should be legally obligated to obtain consent from each person they scan. “Unfortunately, it is a technology that can be used without people’s knowledge, and it’s being used on them, and it’s not being used on them to make them happier,” he says. “It’s being used on them to get them to buy products they might not otherwise buy. And that’s probably the most benign of the nonbenign uses of it.”

Emotion AI has entered personal spaces, too, where the potential hoard of behavioral data is even richer. Amazon’s Alexa analyzes users’ vocal intonation for signs of frustration to improve its algorithms, according to a spokesperson. By 2023 some automakers will be debuting AI-enabled in-cabin systems that will generate huge amounts of data on driver and passenger behavior. Automakers will want those data, also likely anonymized, for purposes such as refining system responses and in-car design and for measuring aggregated behavior such as driver performance. (Tesla already collects data from multiple sources in its vehicles.) Customers would likely have the option of activating various levels of these systems, according to Modar Alaoui, CEO of emotion-AI company Eyeris, so if occupants do not use certain functions, data would not be collected on those. The in-cabin systems designed by Affectiva (recently acquired by Swedish firm Smart Eye) do not record video but would make metadata available, says chief marketing officer Gabi Zijderveld.

Aleix Martinez, a computer vision scientist at Ohio State and Amazon and a co-author with Barrett of the 2019 paper criticizing the face-emotion connection, has a photo he is fond of showing people. It is of a man’s face that appears to be twisted in a mixture of anger and fear. Then he shows the full image: it is a soccer player exultant after scoring a goal. Facial expressions, gestures, and other signals are not only a product of the body and brain, he notes, but of context, of what is happening around a person. So far that has proved the biggest challenge for emotion AI: interpreting ambiguous context. “Unless I know what soccer is, I’m never going to be able to understand what happened there,” Martinez says. “So that knowledge is fundamental, and we don’t have any AI system right now that can do a good job at that at all.”

The technology becomes more effective, Martinez says, if the task is narrow, the surroundings are simple, and the biometric information collected is diverse—voice, gestures, pulse, blood flow under the skin, and so on. Coming generations of emotion AI may combine exactly this kind of information. But that, in turn, will only create more powerful and intrusive technologies that societies may not be prepared for.